In physics, experimenters have been gathering and analyzing vast amounts of data for decades, employing data analysis methods such as Monte Carlo simulations and, more recently, machine learning. However, the philosophical implications of processing the recorded signals and transforming them into measured physical quantities have only recently been recognized (e.g., Beauchemin 2017, Karaca 2018, Leonelli & Tempini 2020; cf. also Falkenburg 2007). Recorded signals are inherently discrete and possess limited spatial and temporal resolution. They are subject to background contamination, noise, and measurement errors. Their relationship to physical quantities (which are commonly represented by continuous functions) depends on the specific characteristics of the detectors with which the raw data are taken.

If we knew the underlying theoretical function f(x) of a physical quantity and the response function A(x,y) that translates this function into a detectable signal by taking into account all possible physical processes occurring in the detector, we could derive the expected signal g(y) in the presence of a background b(y) by evaluating a Fredholm integral equation:

g(y) = ∫A(x,y) f(x) dx + b(y)

In fact, physicists face the inverse challenge of determining a theoretical function f(x) from a recorded distribution of detected events g(y). This requires the inversion of the above Fredholm integral equation. From a mathematical point of view, this is an ill-posed problem; therefore its solution is unstable under small variations of the function f(x), which is often not exactly known, and the response function A(x,y), which is never precisely known. Physicists resort to Monte Carlo simulations in order to determine the response function numerically. This involves calculating the possible particle interactions and their cross-sections in quantum field theory, and simulating individual trajectories from randomly generated initial conditions (Morik & Rhode 2023). The indispensable inclusion of Monte Carlo simulations in experimental practice and data analysis in physics raises new philosophical questions regarding the kind of knowledge gained from them (Beisbart 2012, Boge 2019) and how this affects the trust in experimental results (Boge 2024). Furthermore, each component of the Fredholm integral equation poses its own epistemological challenges. Accordingly, we identify five basic epistemological problems of data analysis in contemporary physics:

1) What can be measured? What is merely derived from the raw data and to what extent is the derivation theory-laden?

2) How can we determine the background and how can we separate it from specific signals? How should we balance purity vs. sensitivity in the signal-background separation?

3) What is to be included in the response function A(x,y) (e.g., theoretical knowledge about particle interactions, knowledge about the function and geometry of the detectors)? Which conceptual and practical difficulties arise when simulating what is happening in the detector?

4) What initial assumptions do we make regarding the theoretical function f(x)? How can we ensure that these initial assumptions do not unduly bias the final results?

5) How should we deal with the fact that the inverse problem is ill-posed? How can we prevent the results from depending crucially on precise information about the response function?
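The instability behind problem 5 can be illustrated with a minimal numerical sketch. It assumes a binned version of the Fredholm equation, g = A f + b, with a hypothetical Gaussian smearing matrix standing in for the detector response; the bin count, smearing width, background level, noise level, and regularization strength `tau` are illustrative choices, not values taken from any experiment. Tikhonov regularization is used here only as one common stabilization technique, not as the method of any particular analysis.

```python
import numpy as np

def response_matrix(n_bins, width):
    """Hypothetical Gaussian smearing: column j spreads true bin j over observed bins."""
    idx = np.arange(n_bins)
    A = np.exp(-0.5 * ((idx[:, None] - idx[None, :]) / width) ** 2)
    return A / A.sum(axis=0, keepdims=True)  # normalize so each column sums to 1

def fold(A, f, b):
    """Forward problem: expected signal g = A f + b."""
    return A @ f + b

def naive_unfold(A, g, b):
    """Direct inversion of the binned equation (unstable for smearing kernels)."""
    return np.linalg.solve(A, g - b)

def tikhonov_unfold(A, g, b, tau):
    """Regularized inversion: minimizes |A f - (g - b)|^2 + tau * |f|^2."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + tau * np.eye(n), A.T @ (g - b))

# Illustrative setup (all numbers are assumptions for this sketch)
rng = np.random.default_rng(0)
n_bins = 20
x = np.arange(n_bins)
f_true = 1000.0 * np.exp(-0.5 * ((x - 10) / 3.0) ** 2)  # assumed "true" spectrum
b = np.full(n_bins, 5.0)                                 # assumed flat background
A = response_matrix(n_bins, width=2.0)

g_exact = fold(A, f_true, b)
g_noisy = g_exact + rng.normal(0.0, 1.0, n_bins)  # small perturbation of the signal

f_naive = naive_unfold(A, g_noisy, b)
f_reg = tikhonov_unfold(A, g_noisy, b, tau=1e-2)

cond = np.linalg.cond(A)                       # large value signals ill-conditioning
err_naive = np.linalg.norm(f_naive - f_true)   # error of direct inversion
err_reg = np.linalg.norm(f_reg - f_true)       # error of regularized inversion
print(f"condition number: {cond:.3g}")
print(f"naive error: {err_naive:.3g}, regularized error: {err_reg:.3g}")
```

In this toy setting the direct inversion amplifies a perturbation that is small compared with the signal, while the regularized estimate stays close to the assumed spectrum. The price is a dependence on the chosen `tau` and on the form of the penalty, which is one concrete way in which the assumptions of problems 4 and 5 enter the result.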

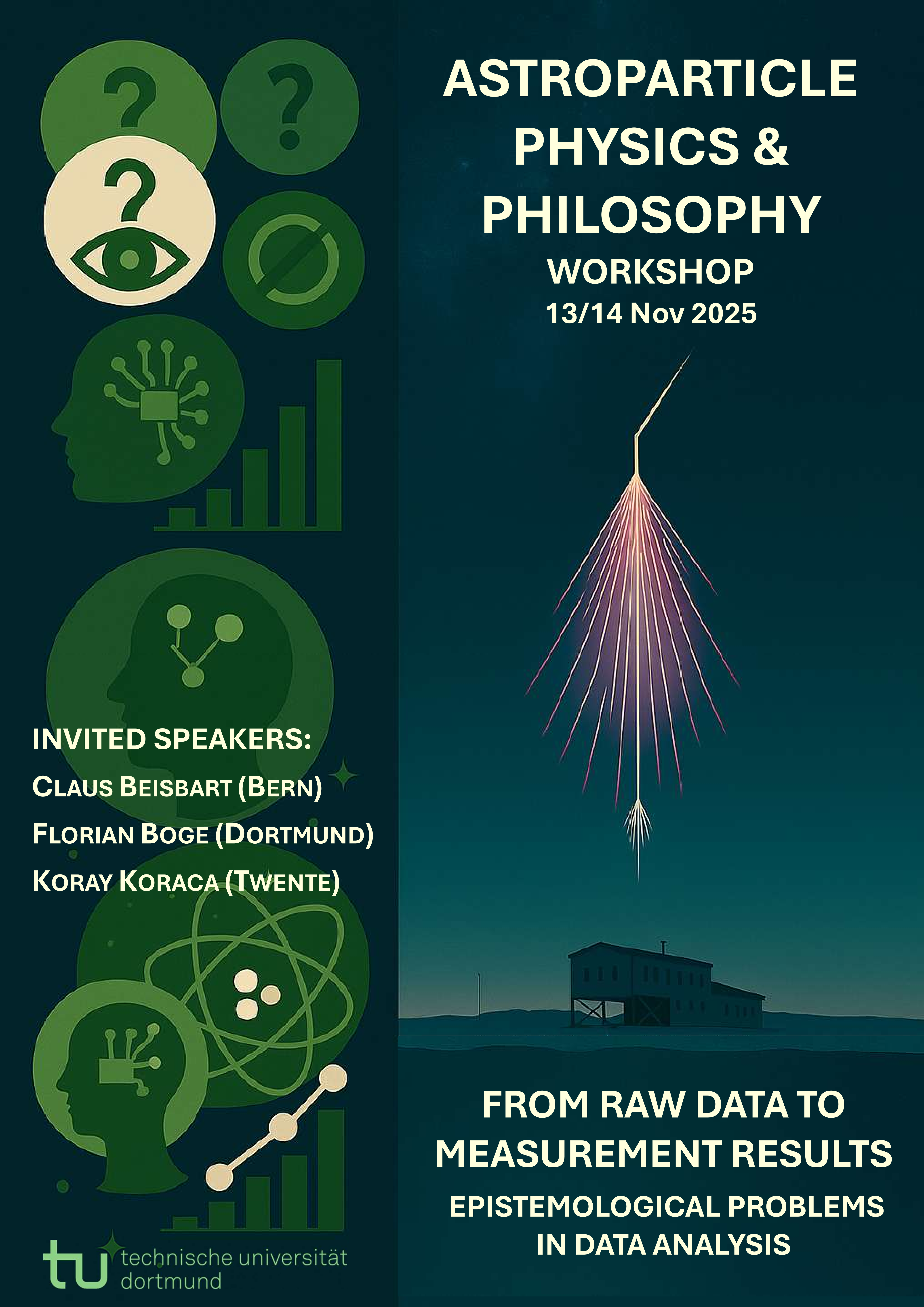

Our workshop seeks to foster interdisciplinary discussions between philosophers, physicists, and computer scientists about these issues. We welcome submissions for short presentations (30-minute talk plus 15 minutes for discussion).

Please submit abstracts of up to 500 words (references excluded) in PDF format. Submissions should be prepared for blind review. The deadline for submission is September 15th. We will inform you of our decision by September 20th.

Participation in the workshop will be free of charge.

The workshop is part of the research project "Data, Theories, and Scientific Explanation: The Case of Astroparticle Physics" funded by the German Research Foundation (DFG).

https://app.physik.tu-dortmund.de/en/research/philosophy-of-astroparticle-physics/

It is organized in cooperation with the Lamarr Institute for Machine Learning and Artificial Intelligence.

Organizers:

Prof. Dr. Dr. Brigitte Falkenburg, Dr. Johannes Mierau, Prof. Dr. Dr. Wolfgang Rhode

Literature:

Antoniou, A. (2021): What is a data model? An anatomy of data analysis in high energy physics. European Journal for Philosophy of Science 11: 101. https://doi.org/10.1007/s13194-021-00412-2

Beauchemin, P. (2017) Autopsy of measurements with the ATLAS detector at the LHC, Synthese, 194, 275-312.

Beisbart, C. (2012) How can computer simulations produce new knowledge? European Journal for Philosophy of Science, 2(3), 395–434.

Beisbart, C. (2018): Are computer simulations experiments? And if not, how are they related to each other? European Journal for Philosophy of Science 8, 171–204. https://doi.org/10.1007/s13194-017-0181-5

Beisbart, C., & J. Norton (2012): Why Monte Carlo Simulations Are Inferences and Not Experiments. International Studies in the Philosophy of Science 26 (4), 403–422.

Boge, F.J. (2019) Why computer simulations are not inferences, and in what sense they are experiments. European Journal for Philosophy of Science 9, 13.

Boge, F.J. (2024) Why Trust a Simulation? Models, Parameters, and Robustness in Simulation-Infected Experiments, The British Journal for the Philosophy of Science, 75(4), 843-870.

Falkenburg, B. (2007) Particle Metaphysics. A Critical Account of Subatomic Reality, Heidelberg: Springer.

Falkenburg, B. (2024): Computer simulation in data analysis: A case study from particle physics. In: Studies in History and Philosophy of Science 105, 99–108. https://www.sciencedirect.com/science/article/pii/S0039368124000530?via%3Dihub

Karaca, K. (2013): The Strong and Weak Senses of Theory-Ladenness of Experimentation: Theory-Driven versus Exploratory Experiments in the History of High-Energy Particle Physics. Science in Context 26, 93–136.

Karaca, K. (2018) Lessons from the Large Hadron Collider for model-based experimentation: the concept of a model of data acquisition and the scope of the hierarchy of models, Synthese, 195, 5431-5452.

Leonelli, S. & N. Tempini. (2020) Data Journeys in the Sciences, Cham: Springer.

Morik, K. & W. Rhode. (2023) Machine Learning under Resource Constraints - Discovery in Physics, Berlin/Boston: de Gruyter.